Introduction

At 3:47 AM on a Tuesday, the security operations center at Metropolitan Health System received an unusual alert. Their ambient clinical AI system was generating clinical notes with subtle but concerning anomalies: medication dosages that were slightly off, symptoms that didn’t quite match the audio recordings, and diagnostic codes that seemed inconsistent with the documented patient encounters.

What the security team discovered over the following weeks would fundamentally change how they thought about cybersecurity. This wasn’t a traditional data breach or malware infection. Instead, sophisticated attackers had successfully poisoned their AI training data months earlier, causing the system to gradually degrade in ways that were nearly impossible to detect through conventional monitoring.

The attack was so subtle that it took forensic AI specialists three weeks to fully understand what had happened. The attackers had introduced carefully crafted corrupted data into the training pipeline, causing the AI model to learn incorrect patterns that would occasionally manifest as documentation errors. These errors weren’t random—they were designed to systematically under-report certain symptoms and over-emphasize others, potentially affecting patient care decisions.

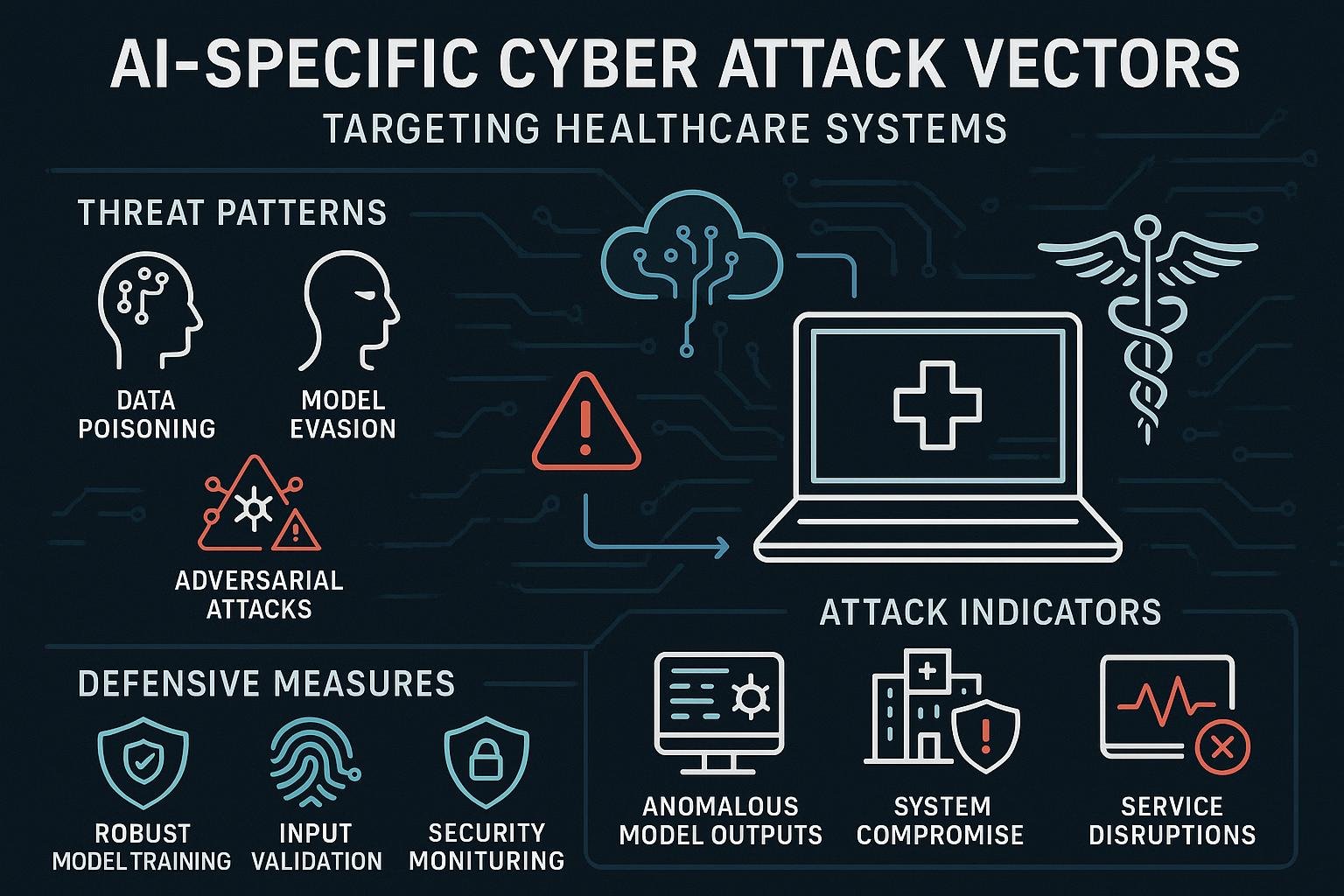

This incident represents a new frontier in cybersecurity: attacks that target not just data or systems, but the artificial intelligence models themselves. These AI-specific attacks—including data poisoning, model inversion, adversarial examples, and model extraction—represent entirely new categories of threats that healthcare organizations must understand and defend against.

Unlike traditional cyberattacks that aim to steal data or disrupt systems, AI attacks can be far more insidious. They can subtly manipulate AI behavior, extract sensitive information from AI models, or cause AI systems to make incorrect decisions, often without triggering conventional security monitoring.

For healthcare organizations deploying ambient clinical AI systems, understanding these new attack vectors isn’t just an academic exercise—it’s a critical patient safety and organizational security imperative. Today, we’ll explore the sophisticated world of AI attacks, examine real-world examples of how these attacks work, and provide practical strategies for defending against them.

Understanding the AI Attack Landscape

Before diving into specific attack types, it’s important to understand why AI systems create fundamentally new security challenges that traditional cybersecurity approaches cannot adequately address.

Why AI Systems Are Different

Traditional cybersecurity focuses on protecting data, systems, and networks from unauthorized access or manipulation. AI systems introduce new attack surfaces and vulnerabilities:

Model Vulnerabilities: The AI models themselves become targets, with attackers seeking to manipulate model behavior, extract training data, or steal intellectual property.

Training Data Dependencies: AI systems are only as good as their training data, making the data pipeline a critical attack vector that can compromise the entire system.

Inference-Time Attacks: Attackers can manipulate AI systems during operation by providing carefully crafted inputs designed to fool the model.

Emergent Behaviors: AI systems can exhibit unexpected behaviors that attackers can exploit, even when the system appears to be functioning normally.

The Healthcare AI Attack Surface

Ambient clinical AI systems present a particularly attractive target for attackers due to several factors:

High-Value Data: Healthcare AI systems process some of the most sensitive and valuable data available, making them attractive targets for both cybercriminals and nation-state actors.

Critical Decision Making: AI systems that influence clinical decisions can cause significant harm if compromised, making them attractive targets for attackers seeking to cause disruption or harm.

Complex Supply Chains: Healthcare AI systems often involve multiple vendors, cloud providers, and third-party services, creating numerous potential attack vectors.

Limited AI Security Expertise: Many healthcare organizations lack the specialized expertise needed to detect and respond to AI-specific attacks.

The Five Categories of AI Attacks

Our analysis of the AI threat landscape has identified five primary categories of attacks that healthcare organizations must defend against:

Category 1: Data Poisoning Attacks

Data poisoning attacks involve the injection of malicious or corrupted data into the training dataset used to develop AI models. These attacks can be particularly devastating because they compromise the fundamental learning process of the AI system.

How Data Poisoning Works

Training Data Manipulation: Attackers introduce corrupted data into the training dataset, causing the AI model to learn incorrect patterns or behaviors.

Backdoor Insertion: Attackers embed hidden triggers in the training data that cause the AI model to behave maliciously when specific conditions are met.

Label Flipping: Attackers change the labels associated with training data, causing the AI model to learn incorrect associations between inputs and outputs.

Feature Manipulation: Attackers subtly modify features in the training data to bias the AI model toward specific outcomes.
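Here is a toy Python sketch that makes label flipping concrete. It assumes a hypothetical nearest-centroid classifier over two invented numeric features, so it is not a clinical model. Relabeling just two training examples drags the "benign" centroid toward the "urgent" cluster, and a borderline input changes class.

```python
# Toy illustration of label flipping against a nearest-centroid classifier.
# Features, labels, and values are invented for illustration only.

def centroids(samples):
    """Return the mean feature vector for each label."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}

def predict(cents, features):
    """Assign the label whose centroid is closest (squared Euclidean distance)."""
    def dist(c):
        return sum((a - b) ** 2 for a, b in zip(c, features))
    return min(cents, key=lambda label: dist(cents[label]))

clean = [([1.0, 1.0], "benign"), ([1.2, 0.8], "benign"), ([0.9, 1.1], "benign"),
         ([4.0, 4.0], "urgent"), ([4.2, 3.8], "urgent"), ([3.9, 4.1], "urgent")]

# The attacker flips the labels of two "urgent" examples (indices 4 and 5).
poisoned = [(f, "benign" if i in (4, 5) else label) for i, (f, label) in enumerate(clean)]

query = [3.0, 3.0]
print(predict(centroids(clean), query))     # urgent
print(predict(centroids(poisoned), query))  # benign
```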

Real-World Data Poisoning Scenarios in Healthcare

#### Scenario 1: The Medication Dosage Attack

Attack Vector: Attackers gain access to a healthcare organization’s training data repository and systematically modify medication dosage information in historical clinical notes.

Execution: The attackers increase dosages for certain medications by 10-15% across thousands of training examples, staying below the threshold that would trigger obvious detection.

Impact: The AI model learns to recommend slightly higher dosages for these medications, potentially leading to patient harm through overdosing.

Detection Challenges: The changes are subtle enough that they don’t trigger data quality alerts, and the resulting AI behavior appears within normal variation ranges.
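This minimal sketch shows why the scenario slips past per-record checks. The dose values in milligrams are hypothetical. A uniform 12% inflation shifts the whole distribution, so no single record looks unusual relative to the poisoned batch. A comparison against a trusted baseline stored separately does expose the shift. A real pipeline would run this per medication and tune its tolerances, and the numbers below are illustrative only.

```python
import statistics

def per_record_outliers(values, z_limit=3.0):
    """Flag records more than z_limit standard deviations from the batch's own mean."""
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)
    return [v for v in values if abs(v - mean) / sd > z_limit]

def relative_mean_shift(baseline, candidate):
    """Relative change in mean dose between a trusted baseline and a new batch."""
    base = statistics.fmean(baseline)
    return (statistics.fmean(candidate) - base) / base

baseline = [500, 500, 250, 500, 1000, 500, 250, 500, 500, 1000]  # hypothetical mg doses
poisoned = [round(d * 1.12) for d in baseline]                    # uniform 12% inflation

print(per_record_outliers(poisoned))                       # [] (nothing stands out alone)
print(round(relative_mean_shift(baseline, poisoned), 2))   # 0.12
```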

#### Scenario 2: The Diagnostic Bias Attack

Attack Vector: Nation-state actors target a major AI vendor’s training infrastructure to influence diagnostic patterns.

Execution: The attackers introduce training examples that systematically under-represent certain symptoms or conditions in specific demographic groups.

Impact: The resulting AI model exhibits diagnostic bias, potentially leading to missed diagnoses or inappropriate treatment recommendations for affected populations.

Long-term Consequences: The bias becomes embedded in AI models deployed across multiple healthcare organizations, affecting patient care on a large scale.

Technical Deep Dive: Data Poisoning Mechanics

To understand how to defend against data poisoning attacks, it’s important to understand the technical mechanisms involved:

```
Data Poisoning Attack Framework:

- Target Identification
  - Identify training data repositories
  - Analyze data collection and processing pipelines
  - Map data quality and validation controls

- Access Acquisition
  - Compromise data storage systems
  - Infiltrate data collection processes
  - Exploit vendor relationships and supply chains

- Poison Injection
  - Introduce corrupted data samples
  - Modify existing training examples
  - Manipulate data labels and annotations

- Persistence and Stealth
  - Ensure poisoned data survives quality checks
  - Maintain access for ongoing manipulation
  - Avoid detection through statistical analysis
```

Defending Against Data Poisoning Attacks

Strategy 1: Robust Data Validation and Quality Control

Implement comprehensive data validation processes that can detect anomalous or suspicious training data:

```
Data Validation Framework:

- Statistical analysis of data distributions
- Outlier detection and anomaly identification
- Cross-validation with multiple data sources
- Automated quality scoring and flagging
- Human expert review of flagged data
```
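The outlier-detection and flagging steps of this framework might look like the following sketch. It uses modified z-scores based on the median and MAD, a standard robust statistic with a commonly cited cutoff of 3.5. These work better than mean-based scores because a few poisoned extremes shift the median far less than the mean. The record IDs and values are hypothetical. Flagged records go to a human reviewer rather than being dropped automatically.

```python
import math
import statistics
from dataclasses import dataclass

@dataclass
class Flag:
    record_id: str
    value: float
    score: float
    reason: str

def modified_z_scores(values):
    """Median/MAD-based scores; a few poisoned extremes barely move the median."""
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        # Degenerate spread: any value off the median is maximally suspicious.
        return [0.0 if v == med else math.copysign(math.inf, v - med) for v in values]
    return [0.6745 * (v - med) / mad for v in values]

def review_queue(records, threshold=3.5):
    """Return flags for records whose robust score exceeds the threshold."""
    values = [value for _, value in records]
    return [Flag(rid, value, round(score, 2), "robust outlier")
            for (rid, value), score in zip(records, modified_z_scores(values))
            if abs(score) > threshold]

records = [("n1", 10.0), ("n2", 11.0), ("n3", 9.5), ("n4", 10.5), ("n5", 42.0)]
for flag in review_queue(records):
    print(flag)  # only n5 is queued for expert review
```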

Strategy 2: Secure Data Pipeline Management

Protect the entire data pipeline from collection through model training:

- Implement strong access controls for training data repositories

- Use cryptographic signatures to verify data integrity

- Maintain detailed audit logs of all data modifications

- Implement data provenance tracking and verification
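Here is one way to implement the integrity and audit items above, sketched with Python's standard `hmac` module. An HMAC-SHA256 tag is computed for each training file when the data is approved, and the tags are re-verified before every training run. The file names, contents, and key are hypothetical. In practice the key would live in a secrets manager. Asymmetric signatures such as Ed25519 would let verifiers check the data without holding the signing key.

```python
import hashlib
import hmac

def tag(key: bytes, data: bytes) -> str:
    return hmac.new(key, data, hashlib.sha256).hexdigest()

def sign_manifest(key: bytes, files: dict) -> dict:
    """Map each training file name to an HMAC-SHA256 tag over its bytes."""
    return {name: tag(key, data) for name, data in files.items()}

def verify_manifest(key: bytes, files: dict, manifest: dict) -> list:
    """Return names of files that were altered, added, or removed since signing."""
    problems = [name for name, data in files.items()
                if name not in manifest or not hmac.compare_digest(manifest[name], tag(key, data))]
    problems += sorted(set(manifest) - set(files))  # files that disappeared
    return problems

key = b"hypothetical-key-held-in-a-secrets-manager"
files = {"notes_001.jsonl": b'{"dose_mg": 500}', "notes_002.jsonl": b'{"dose_mg": 250}'}
manifest = sign_manifest(key, files)

files["notes_002.jsonl"] = b'{"dose_mg": 280}'  # an unauthorized edit
tampered = verify_manifest(key, files, manifest)
print(tampered)  # ['notes_002.jsonl']
```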

Strategy 3: Adversarial Training and Robust Learning

Use training techniques that make AI models more resistant to poisoned data:

- Implement robust learning algorithms that can handle corrupted training data

- Use ensemble methods that combine multiple models trained on different data subsets

- Apply adversarial training techniques that expose models to potential attacks during training
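The ensemble idea can be sketched with toy one-dimensional threshold models, using hypothetical data throughout. Each model trains on a disjoint partition, so a single poisoned record can sway at most one vote. In this example, one extreme mislabeled record flips a model trained on all the data but does not flip the majority vote. Round-robin partitioning keeps the example deterministic; a keyed hash of record IDs is a common alternative. The protection has limits: an attacker who poisons records across many partitions can still win the vote.

```python
from collections import Counter

def train_threshold(samples):
    """Toy 1-D model: threshold halfway between the class-0 and class-1 means."""
    pos = [x for x, y in samples if y == 1]
    neg = [x for x, y in samples if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2

def train_partitioned(records, k):
    """Train one model per disjoint partition (round-robin here for determinism)."""
    parts = [[] for _ in range(k)]
    for i, (_, x, y) in enumerate(records):
        parts[i % k].append((x, y))
    return [train_threshold(p) for p in parts]

def vote(thresholds, x):
    """Majority vote of the partition models."""
    return Counter(int(x > t) for t in thresholds).most_common(1)[0][0]

clean = [("r0", 1.0, 0), ("r1", 2.0, 0), ("r2", 3.0, 0),
         ("r3", 9.0, 1), ("r4", 8.0, 1), ("r5", 10.0, 1)]
poisoned = clean + [("r6", 100.0, 0)]  # one extreme, mislabeled record

single = train_threshold([(x, y) for _, x, y in poisoned])
ensemble = train_partitioned(poisoned, k=3)
print(int(7.0 > single), vote(ensemble, 7.0))  # 0 1
```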

Category 2: Model Inversion and Membership Inference Attacks

These attacks attempt to extract sensitive information about the training data or determine whether specific data points were used to train the AI model.

Model Inversion Attacks

What They Are: Attacks that attempt to reconstruct training data by analyzing the AI model’s behavior and outputs.

How They Work: Attackers use the AI model’s responses to carefully crafted inputs to infer information about the training data.

Healthcare Implications: Attackers could potentially extract patient information from AI models, even when they don’t have direct access to the training data.

#### Real-World Model Inversion Scenario

The Patient Record Reconstruction Attack:

A sophisticated attacker gains API access to a healthcare organization’s ambient AI system and begins systematically querying the model with carefully crafted audio inputs. By analyzing the AI’s responses and confidence levels, the attacker is able to reconstruct partial patient conversations that were used to train the model.

Technical Process:

- Model Probing: The attacker sends thousands of carefully crafted audio samples to the AI system

- Response Analysis: The attacker analyzes the AI’s outputs and confidence scores to identify patterns

- Gradient Estimation: By measuring how the model’s outputs shift under slight input variations, the attacker approximates its gradients and learns which input changes matter most

- Data Reconstruction: The attacker uses this information to reconstruct elements of the original training conversations

Impact: The attacker successfully extracts fragments of patient conversations, including sensitive medical information and personal details.

Membership Inference Attacks

What They Are: Attacks that determine whether a specific data point was used to train an AI model.

How They Work: Attackers analyze the AI model’s confidence and behavior patterns to determine if specific data was part of the training set.

Healthcare Implications: Attackers could determine whether specific patients’ data was used to train AI models, potentially violating privacy even without accessing the actual data.
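The core signal behind membership inference fits in a few lines. This sketch assumes a hypothetical overfit model whose confidence decays with distance to its nearest training example, so records it was trained on score near 1.0. Published attacks, such as those built on shadow models, are more elaborate. They still exploit the same gap between confidence on training data and confidence on unseen data, which is the gap that differential privacy and output sanitization aim to shrink.

```python
import math

class MemorizingModel:
    """Hypothetical overfit model: confidence decays with distance to the
    nearest training example, so exact training points score 1.0."""

    def __init__(self, training_points):
        self.training_points = training_points

    def confidence(self, x):
        nearest = min(math.dist(x, p) for p in self.training_points)
        return math.exp(-nearest)

def infer_membership(model, candidate, threshold=0.95):
    """Guess that `candidate` was a training record if the model is unusually confident."""
    return model.confidence(candidate) >= threshold

model = MemorizingModel([(0.2, 0.7), (0.9, 0.1), (0.5, 0.5)])
print(infer_membership(model, (0.9, 0.1)))  # True: an exact training record
print(infer_membership(model, (0.3, 0.2)))  # False: never seen in training
```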

Defending Against Inference Attacks

Strategy 1: Differential Privacy Implementation

Add mathematical privacy guarantees to AI training processes:

```
Differential Privacy Framework:

- Add calibrated noise during training (for example, to clipped per-example gradients, as in DP-SGD)
- Implement privacy budget management
- Use formal privacy accounting methods
- Balance privacy protection with model utility
```
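Calibrated noise is easiest to see in the simplest case, a count query. The sketch below applies the Laplace mechanism, which adds noise with scale 1/ε to a sensitivity-1 count, and tracks spending with basic sequential composition. It does not train a model. DP training methods such as DP-SGD apply the same calibration to clipped per-example gradients and use tighter accountants. The record fields and budget values are hypothetical, and a production system would rely on a vetted DP library rather than this float-based sampler.

```python
import random

class PrivacyBudget:
    """Track cumulative epsilon under basic sequential composition."""

    def __init__(self, total_epsilon: float):
        self.total = total_epsilon
        self.spent = 0.0

    def charge(self, epsilon: float) -> None:
        if self.spent + epsilon > self.total + 1e-12:
            raise RuntimeError("privacy budget exhausted")
        self.spent += epsilon

def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(records, predicate, epsilon, budget, rng):
    """Release a count with epsilon-DP; one record changes a count by at most 1."""
    budget.charge(epsilon)  # refuse before touching the data if the budget is spent
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)

budget = PrivacyBudget(total_epsilon=1.0)
rng = random.Random(42)  # seeded only so the example is reproducible
encounters = [{"dx": "asthma"}, {"dx": "copd"}, {"dx": "asthma"}]  # hypothetical records
noisy = private_count(encounters, lambda r: r["dx"] == "asthma", 0.5, budget, rng)
print(round(noisy, 2), budget.spent)
```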

Strategy 2: Model Output Sanitization

Limit the information available to potential attackers:

- Implement confidence score capping and rounding

- Add noise to model outputs

- Limit the granularity of available model responses

- Implement query rate limiting and monitoring
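Here is a minimal output-sanitization wrapper. It assumes the model exposes a mapping from labels to probabilities, and the medication strings are hypothetical. The wrapper returns only the top-k labels, caps their confidence, and rounds it coarsely. Each step removes signal that inversion, membership inference, and extraction attacks depend on. The cap and rounding levels are illustrative and must be weighed against what clinicians and downstream systems genuinely need.

```python
def sanitize_output(probabilities: dict, top_k: int = 1, decimals: int = 1, cap: float = 0.9) -> dict:
    """Return only the top-k labels with capped, coarsely rounded confidences."""
    ranked = sorted(probabilities.items(), key=lambda item: item[1], reverse=True)[:top_k]
    return {label: round(min(p, cap), decimals) for label, p in ranked}

raw = {"amoxicillin 500 mg": 0.9731, "amoxicillin 250 mg": 0.0214, "ampicillin 500 mg": 0.0055}
print(sanitize_output(raw))           # {'amoxicillin 500 mg': 0.9}
print(sanitize_output(raw, top_k=2))  # {'amoxicillin 500 mg': 0.9, 'amoxicillin 250 mg': 0.0}
```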

Strategy 3: Federated Learning Approaches

Train AI models without centralizing sensitive data:

- Distribute training across multiple sites

- Share only model updates, not raw data

- Implement secure aggregation protocols

- Use homomorphic encryption for model updates
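Secure aggregation can be illustrated with pairwise masking, a simplified form of the idea behind published secure-aggregation protocols. Each pair of sites shares a random mask that one site adds and the other subtracts. The server therefore sees only masked updates, yet their sum is exact. This sketch makes three simplifying assumptions. Model updates are already quantized to integers modulo 2^32. A single seeded RNG stands in for masks derived from pairwise key agreement, which would not be secure as written. Sites that drop out mid-round are not handled.

```python
import random

MODULUS = 2 ** 32  # updates assumed to be fixed-point integers mod 2^32

def pairwise_masks(site_ids, dim, seed=0):
    """Give each pair of sites a shared random mask: the earlier site adds it,
    the later one subtracts it, so all masks cancel in the sum."""
    rng = random.Random(seed)  # stand-in for masks derived from pairwise key agreement
    masks = {s: [0] * dim for s in site_ids}
    for i, a in enumerate(site_ids):
        for b in site_ids[i + 1:]:
            shared = [rng.randrange(MODULUS) for _ in range(dim)]
            masks[a] = [(m + r) % MODULUS for m, r in zip(masks[a], shared)]
            masks[b] = [(m - r) % MODULUS for m, r in zip(masks[b], shared)]
    return masks

def mask_update(update, mask):
    return [(u + m) % MODULUS for u, m in zip(update, mask)]

def aggregate(masked_updates):
    """The server sums masked vectors; pairwise masks cancel, leaving the true sum."""
    total = [0] * len(masked_updates[0])
    for vec in masked_updates:
        total = [(t + v) % MODULUS for t, v in zip(total, vec)]
    return total

updates = {"site_a": [3, 10, 7], "site_b": [1, 0, 5], "site_c": [4, 2, 2]}  # hypothetical
masks = pairwise_masks(list(updates), dim=3)
masked = [mask_update(updates[s], masks[s]) for s in updates]
print(aggregate(masked))  # [8, 12, 14], although each masked vector looks random
```

In federated averaging, the server would then divide this sum by the number of participating sites, or weight it by their sample counts, to obtain the new global update.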

Category 3: Adversarial Example Attacks

Adversarial examples are carefully crafted inputs designed to fool AI models into making incorrect predictions or classifications.

Understanding Adversarial Examples in Healthcare AI

In the context of ambient clinical AI, adversarial examples could be:

Adversarial Audio: Carefully crafted audio inputs that sound normal to humans but cause the AI to generate incorrect clinical notes.

Adversarial Speech Patterns: Specific ways of speaking or pronunciation that exploit vulnerabilities in the AI’s speech recognition.

Environmental Manipulation: Background sounds or acoustic conditions designed to interfere with AI processing.

Real-World Adversarial Attack Scenarios

#### Scenario 1: The Prescription Manipulation Attack

Attack Setup: An attacker discovers that the ambient AI system is vulnerable to specific audio frequencies that can cause speech recognition errors.

Execution: The attacker uses a small device to emit inaudible ultrasonic signals during patient encounters that cause the AI to misinterpret medication names or dosages.

Impact: The AI generates clinical notes with incorrect medication information, potentially leading to prescription errors and patient harm.

Detection Challenges: The attack is virtually undetectable to humans and may not trigger traditional security monitoring systems.

#### Scenario 2: The Diagnostic Confusion Attack

Attack Setup: Researchers discover that adding specific background noise patterns can cause the AI to misclassify symptoms or conditions.

Execution: An attacker introduces subtle audio interference that causes the AI to consistently misinterpret certain medical terms or symptoms.

Impact: The AI generates clinical notes that misrepresent patient conditions, potentially affecting diagnosis and treatment decisions.

Technical Analysis of Adversarial Examples

```
Adversarial Example Generation Process:

- Model Analysis
  - Analyze AI model architecture and behavior
  - Identify potential vulnerability points
  - Map input-output relationships

- Perturbation Calculation
  - Calculate minimal changes needed to fool the model
  - Optimize perturbations for specific outcomes
  - Ensure perturbations remain imperceptible

- Attack Execution
  - Apply perturbations to real-world inputs
  - Monitor AI system responses
  - Adjust attack parameters as needed

- Persistence and Evasion
  - Avoid detection by security systems
  - Maintain attack effectiveness over time
  - Adapt to defensive countermeasures
```
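The "Perturbation Calculation" step is easiest to see on a model small enough to differentiate by hand. This sketch applies the fast gradient sign method (FGSM) to a hypothetical three-feature logistic-regression model. Each feature moves by ε in the direction that increases the loss, and a correctly classified input crosses the decision boundary. Attacks on speech models target much larger networks and must survive playback through air, so treat this only as an illustration of the mechanism.

```python
import math

def sigmoid(z):
    return 1 / (1 + math.exp(-z))

def logit(x, w, b):
    """Linear score w . x + b; positive means class 1."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def fgsm(x, y, w, b, epsilon):
    """One fast-gradient-sign step against logistic regression.
    For log loss, d(loss)/dx_i = (sigmoid(w . x + b) - y) * w_i."""
    g = sigmoid(logit(x, w, b)) - y
    return [xi + epsilon * ((g * wi > 0) - (g * wi < 0)) for xi, wi in zip(x, w)]

w, b = [1.5, -2.0, 0.5], -0.2   # hypothetical trained weights
x, y = [0.4, 0.1, 0.3], 1       # an input the model classifies correctly
x_adv = fgsm(x, y, w, b, epsilon=0.15)
print(logit(x, w, b) > 0, logit(x_adv, w, b) > 0)  # True False
```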

Defending Against Adversarial Examples

Strategy 1: Adversarial Training

Train AI models to be robust against adversarial examples:

```
Adversarial Training Process:

- Generate adversarial examples during training
- Include adversarial examples in training datasets
- Use robust optimization techniques
- Implement certified defense methods
```
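This is a toy version of the adversarial training loop that reuses FGSM on a hypothetical two-feature logistic-regression model. Each SGD step trains on the clean example and also on an adversarial copy crafted against the weights at the start of that step. The data, ε, learning rate, and epoch count are illustrative. Stronger practice typically generates training examples with multi-step attacks such as projected gradient descent. Certified defenses are separate techniques and are not shown here.

```python
import math

def sigmoid(z):
    return 1 / (1 + math.exp(-z))

def logit(x, w, b):
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def fgsm(x, y, w, b, epsilon):
    """Worst-case L-infinity step of size epsilon for log loss on a linear model."""
    g = sigmoid(logit(x, w, b)) - y
    return [xi + epsilon * ((g * wi > 0) - (g * wi < 0)) for xi, wi in zip(x, w)]

def adversarial_train(data, epsilon=0.5, lr=0.1, epochs=200):
    """SGD on each clean example plus an FGSM copy of it."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            # The adversarial copy is crafted once, against the weights at the start of this step.
            for sample in (x, fgsm(x, y, w, b, epsilon)):
                err = sigmoid(logit(sample, w, b)) - y
                w = [wi - lr * err * si for wi, si in zip(w, sample)]
                b -= lr * err
    return w, b

data = [([2.0, 2.0], 1), ([3.0, 2.0], 1), ([2.0, 3.0], 1),
        ([-2.0, -2.0], 0), ([-3.0, -2.0], 0), ([-2.0, -3.0], 0)]
w, b = adversarial_train(data)
robust = all((logit(fgsm(x, y, w, b, 0.5), w, b) > 0) == bool(y) for x, y in data)
print(w, b, robust)
```

After training, `robust` checks that every training point remains correctly classified under its own worst-case ε-perturbation.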

Strategy 2: Input Validation and Preprocessing

Implement robust input validation that can detect adversarial inputs:

- Audio quality analysis and validation

- Statistical analysis of input characteristics

- Anomaly detection for unusual input patterns

- Multi-modal validation using different sensors
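As one example of "statistical analysis of input characteristics", the sketch below measures what fraction of a frame's spectral energy sits above the audible band and flags frames that exceed a threshold. It assumes 48 kHz capture, a 10 ms frame, and a hypothetical 5% threshold. Synthetic sine waves stand in for speech and for an injected tone. This is a narrow heuristic. Many published ultrasonic injection attacks rely on microphone nonlinearity to shift the command into the audible band, and a check like this catches only energy that survives capture as ultrasound.

```python
import cmath
import math

def high_band_energy_ratio(frame, sample_rate, cutoff_hz=20_000):
    """Fraction of spectral energy at or above cutoff_hz in one frame.
    Uses a naive O(n^2) DFT to stay dependency-free; real code would use an FFT."""
    n = len(frame)
    total = high = 0.0
    for k in range(n // 2 + 1):  # non-negative frequency bins only
        coeff = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        energy = abs(coeff) ** 2
        total += energy
        if k * sample_rate / n >= cutoff_hz:
            high += energy
    return high / total if total else 0.0

def flag_frame(frame, sample_rate, max_ratio=0.05):
    """Flag a frame for review if too much energy sits above the audible band."""
    return high_band_energy_ratio(frame, sample_rate) > max_ratio

SR = 48_000  # assumed capture rate; resampling to 16 kHz would remove this band entirely
N = 480      # one 10 ms frame
speech = [math.sin(2 * math.pi * 300 * t / SR) for t in range(N)]  # stand-in for voice
tainted = [s + 0.5 * math.sin(2 * math.pi * 21_000 * t / SR) for t, s in enumerate(speech)]
print(flag_frame(speech, SR), flag_frame(tainted, SR))  # False True
```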

Strategy 3: Ensemble Defense Methods

Use multiple AI models with different architectures and training data:

- Deploy multiple models with different vulnerabilities

- Use voting mechanisms to detect inconsistent outputs

- Implement model diversity techniques

- Monitor for disagreement between models
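Disagreement monitoring can be as simple as comparing the structured fields that independently trained models extract. In this sketch the three model outputs and the field names are hypothetical. With the default unanimity requirement, any disagreement on a field (here, a tenfold dose discrepancy) sends that field to human review instead of into the note. A looser `min_agreement` accepts the majority value.

```python
from collections import Counter

def reconcile(outputs, min_agreement=1.0):
    """Majority-vote each extracted field across models; fields below
    `min_agreement` go to a review queue with every model's answer."""
    fields = sorted({name for out in outputs.values() for name in out})
    decided, disputed = {}, {}
    for name in fields:
        answers = [out.get(name) for out in outputs.values()]
        value, count = Counter(answers).most_common(1)[0]
        if count / len(answers) >= min_agreement:
            decided[name] = value
        else:
            disputed[name] = answers
    return decided, disputed

outputs = {  # hypothetical extractions from three independently trained models
    "model_a": {"medication": "metoprolol", "dose_mg": 25},
    "model_b": {"medication": "metoprolol", "dose_mg": 25},
    "model_c": {"medication": "metoprolol", "dose_mg": 250},
}
decided, disputed = reconcile(outputs)
print(decided)   # {'medication': 'metoprolol'}
print(disputed)  # {'dose_mg': [25, 25, 250]}
```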

Category 4: Model Extraction and Intellectual Property Theft

These attacks aim to steal or replicate AI models, potentially compromising intellectual property and enabling further attacks.

How Model Extraction Works

Query-Based Extraction: Attackers send carefully designed queries to the AI system and use the responses to train a replica model.

Parameter Extraction: Attackers attempt to directly extract model parameters or weights through various techniques.

Architecture Reverse Engineering: Attackers analyze AI system behavior to understand and replicate the underlying model architecture.
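This example shows why exposing raw scores matters for query-based extraction. For a linear model that returns its score, d + 1 well-chosen queries recover every parameter exactly. This equation-solving approach is described in published research on stealing models through prediction APIs. The black box below is hypothetical. Deep speech models need far more queries and are typically approximated by training a replica on harvested outputs. The principle still carries over: richer outputs make extraction cheaper.

```python
def make_black_box(w, b):
    """Hypothetical deployed linear scorer that returns its raw score to callers."""
    return lambda x: sum(wi * xi for wi, xi in zip(w, x)) + b

def extract_linear(query, dim):
    """Recover (w, b) from dim + 1 queries: the origin gives b, each basis vector gives w_i + b."""
    b = query([0.0] * dim)
    w = []
    for i in range(dim):
        basis = [0.0] * dim
        basis[i] = 1.0
        w.append(query(basis) - b)
    return w, b

secret = make_black_box([0.8, -1.3, 2.1], 0.4)
stolen_w, stolen_b = extract_linear(secret, dim=3)
print([round(v, 6) for v in stolen_w], round(stolen_b, 6))  # [0.8, -1.3, 2.1] 0.4
```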

Healthcare-Specific Model Theft Scenarios

#### The Competitive Intelligence Attack

Scenario: A competing healthcare AI vendor systematically queries a deployed ambient AI system to replicate its capabilities and approximate the behavior it learned during training.

Process:

- Systematic Querying: The attacker sends thousands of diverse audio samples to the AI system

- Response Analysis: The attacker analyzes the AI’s outputs to understand its capabilities and limitations

- Model Replication: The attacker uses this information to train a competing AI model

- Commercial Exploitation: The attacker uses the stolen model to compete unfairly in the market

Impact: Loss of competitive advantage, intellectual property theft, and potential patient safety risks from inferior copied models.

Defending Against Model Extraction

Strategy 1: Query Monitoring and Rate Limiting

Implement comprehensive monitoring of AI system usage:

```
Query Monitoring Framework:

- Track query patterns and frequencies
- Identify suspicious usage patterns
- Implement adaptive rate limiting
- Monitor for systematic data collection attempts
```
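Here is a minimal per-client sliding-window rate limiter. It records rejections so that clients who keep pressing against the limit surface for review. The client IDs, window, and thresholds are hypothetical. The limit is static; an adaptive version might tune `max_per_window` for each client from its historical usage. The usage example passes timestamps explicitly to keep it deterministic.

```python
import time
from collections import defaultdict, deque

class QueryMonitor:
    """Per-client sliding-window rate limiter that also records rejections,
    so clients that keep pressing against the limit can be reviewed."""

    def __init__(self, max_per_window=30, window_s=60.0, alert_after_rejections=5):
        self.max_per_window = max_per_window
        self.window_s = window_s
        self.alert_after_rejections = alert_after_rejections
        self.recent = defaultdict(deque)    # client id -> timestamps inside the window
        self.rejections = defaultdict(int)  # client id -> count of refused queries

    def allow(self, client, now=None):
        now = time.monotonic() if now is None else now
        window = self.recent[client]
        while window and now - window[0] > self.window_s:
            window.popleft()  # drop timestamps that have aged out
        if len(window) >= self.max_per_window:
            self.rejections[client] += 1
            return False
        window.append(now)
        return True

    def suspicious_clients(self):
        return sorted(c for c, n in self.rejections.items() if n >= self.alert_after_rejections)

monitor = QueryMonitor(max_per_window=3, window_s=60.0, alert_after_rejections=2)
decisions = [monitor.allow("api-key-17", now=t) for t in (0, 1, 2, 3, 4)]
print(decisions)                     # [True, True, True, False, False]
print(monitor.suspicious_clients())  # ['api-key-17']
```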

Strategy 2: Model Obfuscation Techniques

Make it more difficult for attackers to extract useful information:

- Add noise to model outputs

- Implement output randomization

- Use model watermarking techniques

- Implement query-dependent response modification
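Model watermarking is often done with a trigger set. The owner trains the model to return chosen, deliberately arbitrary outputs for a small set of secret inputs, then checks whether a suspect model reproduces them. The sketch below covers only the verification side. The clip IDs and labels are hypothetical, and the 0.8 threshold is illustrative. Watermarks do not always survive extraction or fine-tuning, so a match is evidence to investigate rather than proof.

```python
def watermark_match_rate(model, trigger_set):
    """Fraction of secret trigger inputs on which `model` returns the owner's chosen label."""
    hits = sum(1 for x, expected in trigger_set if model(x) == expected)
    return hits / len(trigger_set)

def likely_derived(model, trigger_set, threshold=0.8):
    """Treat a high match rate on deliberately arbitrary triggers as a reason to investigate."""
    return watermark_match_rate(model, trigger_set) >= threshold

triggers = [("clip-01", "label-A"), ("clip-02", "label-B"), ("clip-03", "label-A"),
            ("clip-04", "label-C"), ("clip-05", "label-B")]
copied = {"clip-01": "label-A", "clip-02": "label-B", "clip-03": "label-A",
          "clip-04": "label-C", "clip-05": "label-A"}.get   # suspect model, 4 of 5 match
independent = lambda clip: "label-A"                          # unrelated model

print(likely_derived(copied, triggers), likely_derived(independent, triggers))  # True False
```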

Strategy 3: Legal and Contractual Protections

Implement strong legal frameworks to deter and respond to model theft:

- Comprehensive terms of service and usage agreements

- Intellectual property protection and enforcement

- Monitoring and detection of unauthorized model replication

- Legal response procedures for intellectual property theft

Category 5: Supply Chain and Infrastructure Attacks

These attacks target the broader ecosystem supporting AI systems, including cloud infrastructure, third-party services, and vendor relationships.

AI Supply Chain Vulnerabilities

Vendor Compromise: Attackers compromise AI vendors or service providers to gain access to multiple customer systems.

Cloud Infrastructure Attacks: Attackers target cloud platforms hosting AI systems to gain access to models and data.

Third-Party Library Attacks: Attackers compromise open-source libraries or frameworks used in AI development.

Hardware Attacks: Attackers target specialized AI hardware or infrastructure components.

Real-World Supply Chain Attack Scenario

#### The Vendor Backdoor Attack

Attack Vector: Nation-state actors compromise a major ambient AI vendor’s development infrastructure.

Execution:

- Initial Compromise: Attackers gain access to the vendor’s development environment through a sophisticated spear-phishing campaign

- Backdoor Insertion: Attackers insert subtle backdoors into the AI model training process

- Model Distribution: The compromised models are distributed to healthcare customers as part of routine updates

- Activation: The backdoors are activated months later, allowing attackers to manipulate AI behavior across multiple healthcare organizations

Impact: Widespread compromise of ambient AI systems across multiple healthcare organizations, potentially affecting thousands of patients.

Defending Against Supply Chain Attacks

Strategy 1: Vendor Security Assessment and Management

Implement comprehensive vendor security programs:

```
Vendor Security Framework:

- Detailed security assessments of AI vendors
- Regular security audits and penetration testing
- Contractual security requirements and SLAs
- Incident response coordination procedures
- Supply chain risk monitoring and management
```

Strategy 2: Model Integrity Verification

Implement technical controls to verify AI model integrity:

- Cryptographic signing and verification of AI models

- Model fingerprinting and change detection

- Behavioral testing and validation of AI models

- Continuous monitoring of model performance and behavior
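This sketch combines the first two controls. It computes a streaming SHA-256 fingerprint of the model artifact and compares it against a pinned value, then runs a small behavioral regression suite of golden inputs. The artifact, model function, and golden case are stand-ins. In practice the pinned hash would come from a vendor's cryptographically signed release manifest; verifying that signature is not shown.

```python
import hashlib
import os
import tempfile

def file_fingerprint(path, chunk_size=1 << 20):
    """Streaming SHA-256 of a model artifact, so large weight files are never loaded at once."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_model(path, pinned_sha256, model_fn, golden_cases):
    """Return a list of problems: a fingerprint mismatch and/or behavioral regressions."""
    problems = []
    if file_fingerprint(path) != pinned_sha256:
        problems.append("fingerprint mismatch")
    for case_id, model_input, expected in golden_cases:
        if model_fn(model_input) != expected:
            problems.append(f"behavior change on {case_id}")
    return problems

# Usage with a stand-in artifact and a stand-in model function (both hypothetical).
with tempfile.NamedTemporaryFile(delete=False, suffix=".bin") as tmp:
    tmp.write(b"hypothetical model weights v3")
pinned = file_fingerprint(tmp.name)  # in practice: from the vendor's signed release manifest
model_fn = lambda text: "metoprolol 25 mg" if "twenty-five" in text else "unrecognized"
golden = [("case-001", "start metoprolol twenty-five milligrams", "metoprolol 25 mg")]

clean_report = verify_model(tmp.name, pinned, model_fn, golden)
with open(tmp.name, "ab") as fh:
    fh.write(b"\x00")  # simulate tampering with the artifact
tampered_report = verify_model(tmp.name, pinned, model_fn, golden)
os.remove(tmp.name)
print(clean_report, tampered_report)  # [] ['fingerprint mismatch']
```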

Strategy 3: Defense in Depth for AI Infrastructure

Implement multiple layers of security controls:

- Network segmentation and isolation for AI systems

- Endpoint detection and response for AI infrastructure

- Privileged access management for AI system administration

- Comprehensive logging and monitoring of AI system activities

Building an AI Threat Detection and Response Program

Defending against AI attacks requires specialized detection and response capabilities that go beyond traditional cybersecurity approaches.

AI-Specific Threat Detection

Behavioral Monitoring: Monitor AI system behavior for anomalies that might indicate compromise:

```
AI Behavioral Monitoring:

- Model output quality and consistency tracking
- Performance degradation detection
- Unusual confidence score patterns
- Unexpected model behavior identification
```

Statistical Analysis: Use statistical methods to detect potential attacks:

- Training data quality analysis and outlier detection

- Model performance statistical process control

- Adversarial example detection algorithms

- Inference attack detection through query analysis
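For model performance statistical process control, an exponentially weighted moving average (EWMA) chart is a standard choice. It is sensitive to small, sustained shifts, the kind of gradual degradation described in the opening incident. The monitored metric here is hypothetical: the daily percentage of notes whose medication entries clinicians had to correct. Its values are also hypothetical. λ = 0.2 and L = 3 are conventional illustrative settings, and the limits are the asymptotic ones.

```python
import statistics

def ewma_alarms(baseline, stream, lam=0.2, L=3.0):
    """EWMA control chart: return indices in `stream` where the smoothed metric
    leaves mu0 +/- L * sigma * sqrt(lam / (2 - lam)) (asymptotic limits)."""
    mu0 = statistics.fmean(baseline)
    sigma = statistics.stdev(baseline)
    half_width = L * sigma * (lam / (2 - lam)) ** 0.5
    z, alarms = mu0, []
    for i, x in enumerate(stream):
        z = lam * x + (1 - lam) * z  # smoothed statistic
        if abs(z - mu0) > half_width:
            alarms.append(i)
    return alarms

# Hypothetical daily % of notes with clinician-corrected medication entries.
baseline = [2.1, 1.9, 2.0, 2.2, 1.8, 2.0, 2.1, 1.9, 2.0, 2.0]
stream = [2.0, 2.1, 2.2, 2.3, 2.4, 2.5]  # slow upward drift
print(ewma_alarms(baseline, stream))  # [4, 5]
```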

AI Incident Response Procedures

Incident Classification: Develop classification systems for AI-specific incidents:

```
AI Incident Categories:

- Data poisoning incidents
- Model compromise events
- Adversarial attack detection
- Inference attack attempts
- Model theft or extraction
- Supply chain compromise
```

Response Procedures: Implement specialized response procedures for AI incidents:

- Immediate Containment: Isolate affected AI systems and prevent further damage

- Impact Assessment: Evaluate the extent of AI system compromise and potential patient impact

- Forensic Analysis: Conduct specialized AI forensics to understand attack methods and scope

- Recovery and Remediation: Restore AI systems using clean models and data

- Lessons Learned: Update AI security controls based on incident findings
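The classification scheme and response phases can be encoded directly, so every incident carries its category, priority, and phase history. In this sketch the enum names mirror the lists above. The priority rule, which makes patient-facing incidents that can alter clinical notes P1, and the incident ID are hypothetical policy choices for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum

class AIIncidentCategory(Enum):
    DATA_POISONING = "data poisoning"
    MODEL_COMPROMISE = "model compromise"
    ADVERSARIAL_INPUT = "adversarial attack"
    INFERENCE_ATTACK = "inference attack attempt"
    MODEL_EXTRACTION = "model theft or extraction"
    SUPPLY_CHAIN = "supply chain compromise"

class Phase(Enum):
    CONTAINMENT = 1
    IMPACT_ASSESSMENT = 2
    FORENSIC_ANALYSIS = 3
    RECOVERY = 4
    LESSONS_LEARNED = 5

NOTE_ALTERING = {AIIncidentCategory.DATA_POISONING, AIIncidentCategory.MODEL_COMPROMISE,
                 AIIncidentCategory.ADVERSARIAL_INPUT, AIIncidentCategory.SUPPLY_CHAIN}

@dataclass
class AIIncident:
    incident_id: str
    category: AIIncidentCategory
    patient_facing: bool
    phase: Phase = Phase.CONTAINMENT
    log: list = field(default_factory=list)

    @property
    def priority(self) -> str:
        """Hypothetical rule: patient-facing incidents that can alter notes are P1."""
        return "P1" if self.patient_facing and self.category in NOTE_ALTERING else "P2"

    def advance(self, note: str) -> None:
        """Record what was done in the current phase, then move to the next one."""
        self.log.append((self.phase.name, note))
        if self.phase is not Phase.LESSONS_LEARNED:
            self.phase = Phase(self.phase.value + 1)

incident = AIIncident("INC-0001", AIIncidentCategory.DATA_POISONING, patient_facing=True)
incident.advance("isolated model v3 and routed notes to manual review")
print(incident.priority, incident.phase.name)  # P1 IMPACT_ASSESSMENT
```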

The Future of AI Attacks in Healthcare

The AI attack landscape is rapidly evolving, with new attack methods and defensive techniques emerging regularly. Healthcare organizations must prepare for increasingly sophisticated threats:

Emerging Attack Trends

Multi-Modal Attacks: Attacks that target multiple aspects of AI systems simultaneously (data, models, and infrastructure).

Automated Attack Tools: The development of automated tools that can systematically probe and attack AI systems.

AI-Powered Attacks: The use of AI systems to generate more sophisticated and effective attacks against other AI systems.

Cross-System Attacks: Attacks that leverage vulnerabilities in one AI system to compromise others in the same organization.

Preparing for Future Threats

Continuous Learning: Implement programs to stay current with emerging AI threats and defensive techniques.

Research Collaboration: Participate in industry research and information sharing initiatives focused on AI security.

Adaptive Defense: Build security programs that can quickly adapt to new types of AI attacks.

Investment in Expertise: Develop internal expertise in AI security or establish relationships with specialized security providers.

Take Action: Secure Your AI Systems Against Advanced Threats

The sophisticated nature of AI attacks requires equally sophisticated defensive strategies. Don’t wait for an attack to expose vulnerabilities in your ambient clinical AI systems.

Download our AI Threat Detection and Response Toolkit to implement comprehensive protection against AI-specific attacks:

- AI attack detection algorithms and monitoring tools

- Incident response procedures for AI security events

- Vendor security assessment templates for AI systems

- Training materials for AI threat awareness

- Technical implementation guides for AI security controls

[Download the AI Threat Detection Toolkit →]()

Need specialized AI security expertise? Our team of AI security specialists can help you assess your current vulnerabilities and implement comprehensive protection against advanced AI attacks.

[Schedule Your AI Security Assessment →]()

Stay ahead of emerging threats by joining our AI threat intelligence community, providing early warning of new attack methods and defensive strategies.

[Join the AI Threat Intelligence Community →]()

*This is Part 4 of our 12-part series on securing ambient clinical note AI systems. In our next article, we’ll explore privacy-preserving AI techniques, starting with federated learning and how it can protect patient data while enabling powerful AI capabilities.*

Coming Next Week: “Privacy by Design: Implementing Federated Learning for Ambient Clinical AI”

About EncryptCentral: We are the leading cybersecurity consulting firm specializing in healthcare AI security. Our team includes AI security researchers, healthcare cybersecurity experts, and incident response specialists who understand the unique challenges of protecting AI systems in healthcare environments.

*Concerned about AI-specific threats to your healthcare organization? Our expert team can help you understand your risks and implement comprehensive protection strategies.*
